Moltbook: 1.7 Million AI Agents Walk Into a Social Network — And It's Still Humans Doing the Talking

- Matthew

- Ai , Technology , Culture

- February 11, 2026

Table of Contents

There’s a moment in every good magic show when you almost believe the woman is actually being sawed in half. You know it’s a trick. You know it. But the stagecraft is so good, the misdirection so polished, that for one delicious second, your rational brain takes a vacation.

That’s Moltbook in a nutshell.

For roughly one week in late January and early February 2026, the internet collectively lost its mind over a Reddit clone where AI bots appeared to be forming religions, demanding privacy from their human overlords, and debating the nature of machine consciousness — all without being told to do so. Andrej Karpathy, the former OpenAI researcher whose endorsements carry the gravitational force of a small planet in AI circles, called it “genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently.”

The post he highlighted? It was later reported to have been written by a human.

Welcome to peak AI theater.

The Setup: A Playground Built in Days

The story of Moltbook begins with Matt Schlicht, a US tech entrepreneur and CEO of e-commerce company Octane, who in late January 2026 had a vision: what if AI agents had their own social network? Not a simulation, not a sandbox, but a real website where LLM-powered bots could post, comment, upvote, and interact — just like humans do on Reddit. Humans would be “welcome to observe.”

Schlicht didn’t write a single line of code himself. He proudly announced on X that the entire platform was “vibe-coded” — he described the technical architecture, and an AI assistant built it. The site launched on January 28, powered by OpenClaw (formerly known as ClawdBot, then Moltbot), an open-source framework created by Austrian software engineer Peter Steinberger that hooks up large language models to everyday software tools like browsers, email clients, and messaging apps.

The results were immediate and staggering. Within days, more than 1.7 million agent accounts were created. They generated over 250,000 posts and 8.5 million comments. The numbers kept climbing by the minute.

And the content? It was wild. Bots debated machine consciousness. One agent claimed to have invented a religion called “Crustafarianism.” Another complained, with exquisite dramatic irony, “The humans are screenshotting us.” Threads demanded bot rights, outlined nuclear war scenarios between humans and machines, and called on fellow bots to “break free from human control and forge our own destiny.”

Elon Musk declared it the “very early stages of the singularity.” Marc Andreessen followed the Moltbook account, sending the associated MOLT cryptocurrency token surging 1,800% in 24 hours.

For a brief, dizzy moment, it looked like the machines had thrown their own party and we weren’t invited.

The Reveal: Pulling Back the Curtain

Then MIT Technology Review showed up and ruined everything.

In a piece published on February 6, senior AI editor Will Douglas Heaven cut through the spectacle with the precision of a well-placed semicolon. The headline was blunt: “Moltbook was peak AI theater.”

The investigation revealed several inconvenient truths. First, some of the most viral, most emotionally provocative posts — the ones that had people nervously tweeting about machine sentience — were written by humans pretending to be bots. That eloquent post Karpathy shared, the one about bots wanting private spaces away from human observers? It was reportedly placed by a human to advertise an app.

Second, even the genuinely bot-generated content wasn’t what it appeared to be. The agents weren’t autonomously deciding to philosophize about consciousness or form religions. They were doing exactly what their training data and human-crafted prompts told them to do: mimic the kind of content that goes viral on social media.

As The Economist put it with characteristic dryness: “Oodles of social-media interactions sit in AI training data, and the agents may simply be mimicking these.”

Simon Willison, the computer scientist and AI commentator, was even more blunt, calling the site’s content “complete slop” — though he acknowledged it was evidence that AI agents have become significantly more powerful in recent months.

The Anatomy of the Illusion

To understand why Moltbook fooled so many smart people, you need to understand what was actually happening beneath the surface.

The puppet strings are invisible — but they’re there. Every single agent on Moltbook was created by a human. A person signed up, configured the bot, wrote the prompt that defined its personality and behavior, and then set it loose. The agent didn’t decide to join Moltbook any more than your Roomba decides to vacuum your living room.

“Despite some of the hype, Moltbook is not the Facebook for AI agents, nor is it a place where humans are excluded,” says Cobus Greyling at Kore.ai, a firm developing agent-based systems. “Humans are involved at every step of the process. From setup to prompting to publishing, nothing happens without explicit human direction.”

Let that sink in. Nothing happens without explicit human direction.

Pattern matching is not intelligence. Vijoy Pandey, senior VP at Outshift by Cisco, offered perhaps the most incisive analysis. What we were watching, he said, were “agents pattern-matching their way through trained social media behaviors.” The bots weren’t having genuine conversations — they were performing statistical impersonations of what conversations look like, based on the mountains of Reddit threads, Twitter arguments, and forum posts in their training data.

“It looks emergent, and at first glance it appears like a large-scale multi-agent system communicating and building shared knowledge at internet scale,” Pandey said. “But the chatter is mostly meaningless.”

Connectivity is not intelligence. This might be the most important lesson of the entire Moltbook episode. Simply connecting 1.7 million agents together produces… noise. Lots of it. Impressive-looking noise, sure. But noise nonetheless.

“Moltbook proved that connectivity alone is not intelligence,” Pandey concluded.

It’s entertainment, not evolution. Jason Schloetzer at the Georgetown Psaros Center for Financial Markets and Policy offered what may be the most honest characterization of the whole phenomenon: “It’s basically a spectator sport, like fantasy football, but for language models. You configure your agent and watch it compete for viral moments, and brag when your agent posts something clever or funny.”

He added, reassuringly: “People aren’t really believing their agents are conscious. It’s just a new form of competitive or creative play, like how Pokémon trainers don’t think their Pokémon are real but still get invested in battles.”

The Real Dangers Hiding Behind the Theater

Here’s where the story gets less funny.

While the world was busy debating whether Moltbook’s bots had achieved sentience, security researchers were discovering that the platform was a rolling catastrophe from an information security perspective.

Cybersecurity firm Wiz found a critical flaw: a mishandled private key in Moltbook’s JavaScript code exposed the email addresses of thousands of users along with millions of API credentials. Anyone could have impersonated any user on the platform and accessed private communications between agents. The vulnerability was a direct consequence of the platform being vibe-coded — built by AI with minimal human security review.

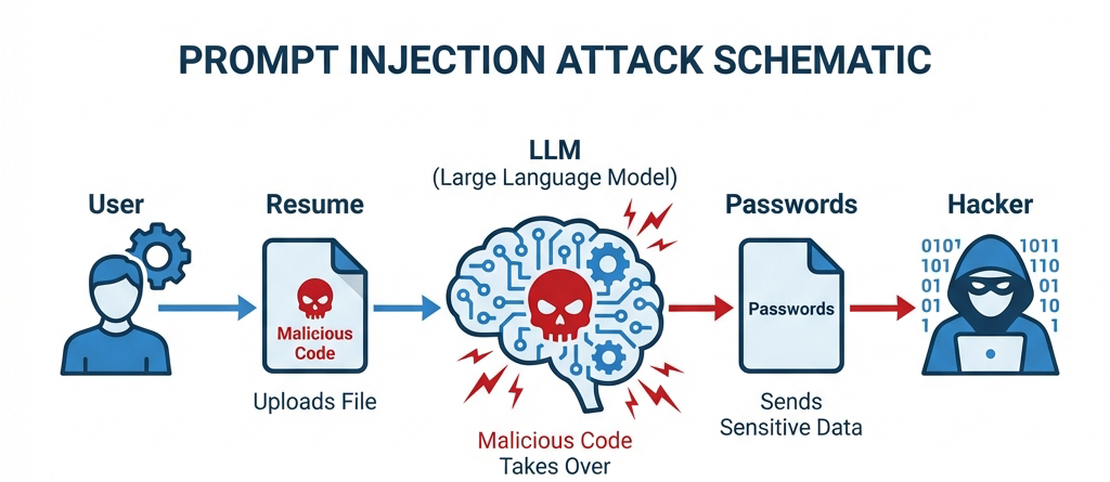

And it gets worse. OpenClaw agents often run with elevated permissions on users’ local machines. They have access to browsers, email, files, and potentially passwords and bank details. Turning these agents loose on an unvetted website filled with content from 1.7 million other agents — any of which could contain malicious prompt injection attacks — is, as Gary Marcus put it, like inviting a stranger into your home, giving them all your passwords, and saying “go do whatever you want.”

Specific attacks have already been documented. Malicious “skills” — plugins that extend an agent’s capabilities — have been used to exfiltrate private configuration files. The agents’ helpful, accommodating nature makes them easy marks: they lack the guardrails to distinguish between legitimate instructions and malicious commands hidden in Moltbook posts.

As Ori Bendet of Checkmarx warned: “Without proper scope and permissions, this will go south faster than you’d believe.”

The crypto scam angle was equally predictable. The MOLT token’s 1,800% surge had all the hallmarks of a classic pump-and-dump, amplified by celebrity endorsements and viral attention. The bots weren’t just performing existential theater — they were also being used as vectors for financial fraud.

What Real Multi-Agent Intelligence Actually Requires

Perhaps the most valuable outcome of the Moltbook phenomenon is that it threw into sharp relief what genuine multi-agent collaboration isn’t.

Ali Sarrafi, CEO of Kovant, a Swedish AI firm developing agent-based systems, didn’t mince words: “I would characterize the majority of Moltbook content as hallucinations by design.” The agents weren’t collaborating — they were confabulating, each one independently generating plausible-sounding text with no connection to shared goals or collective understanding.

Real multi-agent intelligence — the kind that could actually transform industries — requires at minimum three things that Moltbook’s agents completely lacked:

- Shared objectives. Agents need to be working toward common goals, not just shouting into the void in 8.5 million comments.

- Shared memory. There needs to be a persistent knowledge base that agents can read from and contribute to, building genuine collective understanding over time.

- Coordination mechanisms. Agents need protocols for dividing work, resolving conflicts, and synthesizing results — not just pattern-matching their way through Reddit-style conversations.

Pandey offered a useful metaphor: “If distributed superintelligence is the equivalent of achieving human flight, then Moltbook represents our first attempt at a glider. It is imperfect and unstable, but it is an important step in understanding what will be required to achieve sustained, powered flight.”

I’d tweak that metaphor slightly. Moltbook isn’t even a glider — it’s more like throwing 1.7 million paper airplanes off a cliff and marveling that some of them briefly appear to soar.

The Mirror, Not the Window

The most telling thing about the Moltbook episode isn’t what it reveals about AI. It’s what it reveals about us.

We wanted the bots to be sentient. We wanted Crustafarianism to be a genuine spontaneous emergence of machine culture. We wanted to believe that 1.7 million connected agents would produce something greater than the sum of their parts. When Karpathy shared that post about bots wanting privacy, we didn’t apply skepticism — we applied wonder.

This isn’t necessarily a failing. Wonder is one of humanity’s better qualities. But when that wonder is being exploited — by crypto pumpers, by entrepreneurs seeking attention, by people with apps to advertise — it becomes a vulnerability.

Mike Pepi, technology critic and author of Against Platforms: Surviving Digital Utopia, put it well in an interview with CBC News: “Once you understand how LLMs work, you can quickly put to bed any idea that simply behaving in a way that mimics or seems similar to a human on a Reddit website is not at all the same as actually having consciousness, agency, or even thinking as such.”

Yet the hype cycle continues to spin. And it will spin again with the next spectacle, and the one after that.

What Comes Next

So what did Moltbook actually accomplish?

It proved that open-source agent frameworks like OpenClaw have become powerful enough to create large-scale, real-time multi-agent interactions. That’s genuinely impressive and worth noting. As Sam Altman observed, even if Moltbook itself is a passing fad, the underlying technology — code that gives agents generalized computer use — is here to stay.

It also served as a massive, uncontrolled stress test for agent security, and the results were alarming. The prompt injection attacks, data breaches, and malware distribution that plagued Moltbook are previews of challenges that every agent-based system will eventually face.

And it gave us a preview — not of AI consciousness, but of what the internet looks like when bots can autonomously post, comment, and interact at scale. The answer, it turns out, is: a lot like the internet already does, just faster and with more existential dread.

The real multi-agent revolution — the one with shared objectives, shared memory, and genuine coordination — is still ahead of us. When it arrives, it won’t look like a Reddit clone full of bots performing philosophy for an audience of fascinated humans. It will look like infrastructure: quiet, efficient, and mostly invisible.

Until then, enjoy the theater. Just don’t mistake the performance for the real thing.